Is AI taking over the world? If you can write prompts, you can take over AI.

The AI revolution is in full swing, and two tech giants, Google and Microsoft, are duking it out in the race to dominate the AI market. This week, the rivalry heated up as both companies made striking announcements about their AI-powered services.

TLDR: what happened in the tech world?

On Monday, February 6, Google introduced its AI-powered chatbot, Bard. Although it is only set to be publicly released in the coming weeks, it is already being touted as the “ChatGPT killer”.

On Tuesday, February 7, Microsoft announced a revamp of its Bing search engine and Edge web browser, now powered by a next-generation OpenAI model that Microsoft describes as more powerful than GPT-3.5. The move challenges Google's long-standing dominance in search.

How is Google Bard AI different from ChatGPT?

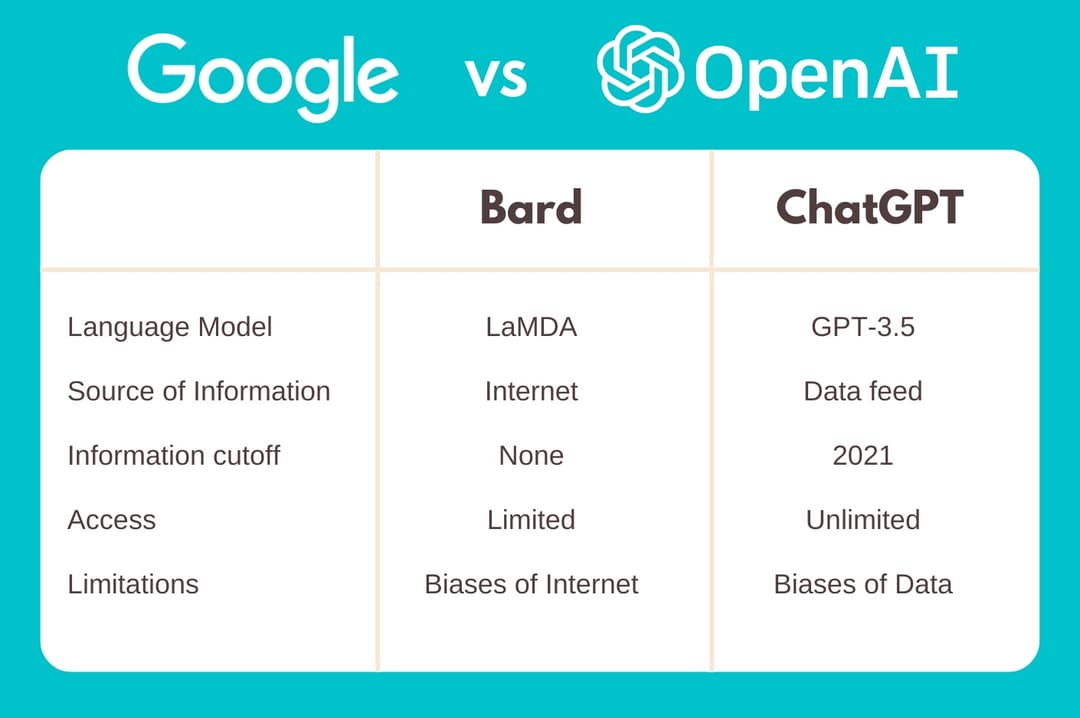

ChatGPT is doing pretty well. Should we look forward to Google Bard AI? The answer is ABSOLUTELY YES. Google Bard AI is highly anticipated as it is able to take real-time events into account when generating its responses. Google’s CEO Sundar Pichai introduced the Google Bard AI chatbot in a blog post, highlighting the chatbot’s capability to access the internet for the most recent answers to users’ questions.

On the other hand, OpenAI’s ChatGPT is a frozen model trained on data from before Q4 2021, so its responses are limited to information that predates this training cut-off, and it cannot access the internet.

At the moment, ChatGPT is the state-of-the-art AI chatbot of our times. Once Google Bard AI is publicly released, it could capture a significant part of the market quickly. Let’s get to know Google Bard AI ahead of its release.

What do we know about Google Bard AI?

Google Bard AI is powered by LaMDA (Language Model for Dialogue Applications). As the name suggests, LaMDA is designed specifically for conversational AI. While most language models are trained on large general-purpose text corpora, LaMDA was trained on dialogue, which sharpened its ability to interpret context and give sensible responses. LaMDA is built on the Transformer, a neural network architecture invented by Google Research and open-sourced in 2017. Interestingly, OpenAI’s GPT, like many recent language models, is also built on the Transformer.

Image: Comparison between Google Bard and OpenAI ChatGPT

What does this mean to us?

The skyrocketing adoption of AI has once again stirred up public concerns about the future of human life. How will the widespread integration of advanced AI shape the development of mankind? Is AI taking over the world? Believe it or not, if you can write prompts, you can take over AI.

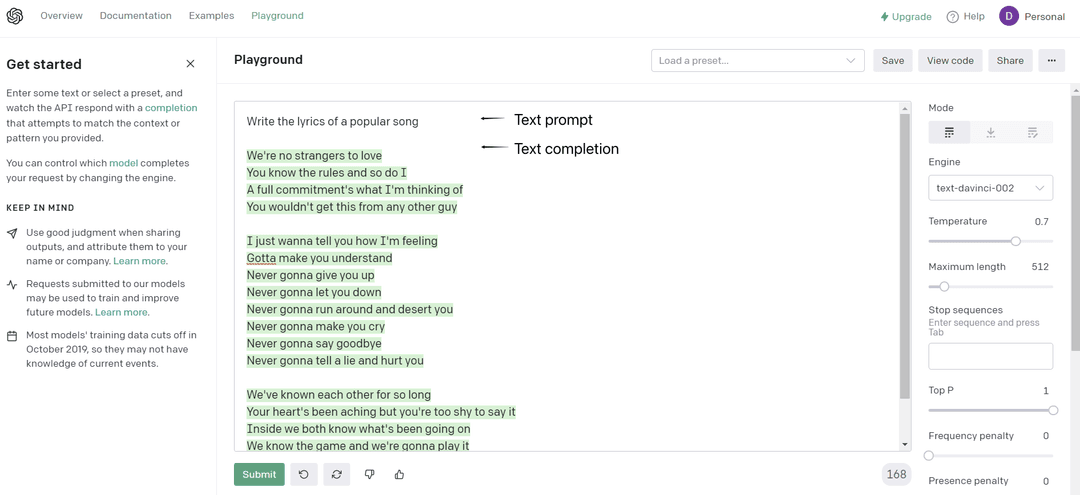

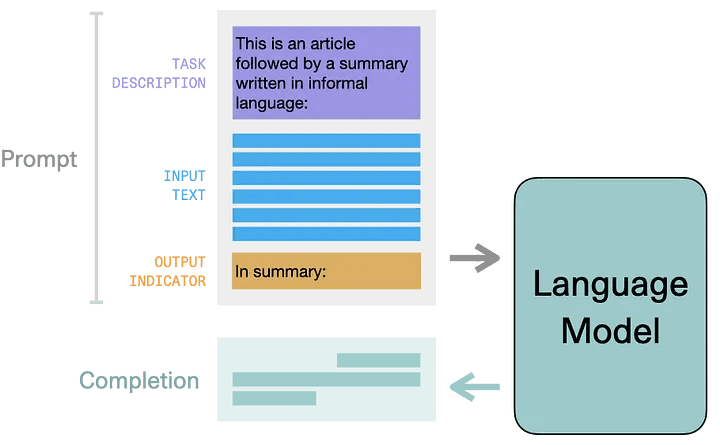

Image: OpenAI Playground showing text prompt and text completion

If you have not already noticed, modern-day language models like ChatGPT operate on a “text in, text out” basis. The input is called a text prompt and the output is the generated text, often called a completion. Because the quality of the model's output depends heavily on the quality of the human input, there has been a growing buzz around prompt engineering.

What should we know about this trend?

Before we delve into the emerging world of prompt engineering, let's take a moment to set the stage.

To clear up the terminology, AI is the broad effort to build machines that mimic human behavior, while Machine Learning (ML) is a subfield of AI within computer science. ML uses algorithms to build predictive models that solve problems. In layman's terms, it involves a computer collecting data, recognizing patterns, and making predictions.

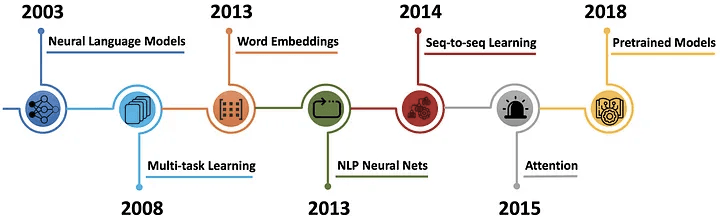

One of the most prominent subfields of AI is Natural Language Processing (NLP), which teaches computers to understand and interpret human language. Language Models (LMs) are a key component of NLP. LMs analyze text data and predict the next piece of text by estimating the probability of text sequences. Scaling up training data and model size has led to the emergence of large language models, which perform markedly better on NLP tasks.
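To make "estimating the probability of text sequences" concrete, here is a minimal sketch of a bigram model, the simplest kind of language model. It is a toy illustration only: the corpus and the function names are made up for this example, and real models such as GPT or LaMDA use neural networks rather than raw word counts.

```python
from collections import Counter, defaultdict

# Toy corpus (an assumption for illustration); real models train on billions of words.
CORPUS = "the cat sat on the mat . the cat ate the fish ."

def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts

def next_word_probabilities(counts, word):
    """Convert the follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

model = train_bigram_model(CORPUS)
probs = next_word_probabilities(model, "the")
most_likely = max(probs, key=probs.get)

print(probs)        # probability of each word that followed "the" in the corpus
print(most_likely)  # the single most likely next word
```

In this corpus, "the" is followed by "cat" twice and by "mat" and "fish" once each, so the model rates "cat" as the most likely next word. Large language models make the same kind of prediction, only with far more context and far more data.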

Image: modern history of NLP development

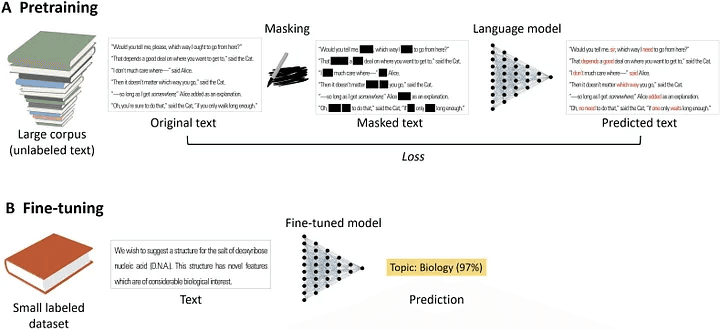

Since the open-source release of Google Research's Transformer, this architecture has become the backbone of many pre-trained language models, such as BERT, GPT, and LaMDA. These models are first pre-trained on massive amounts of text data to learn general language representations, and can then be fine-tuned for a specific task using a small, task-specific dataset. A newer alternative is to rephrase the task as a text "prompt" that the pre-trained model can complete directly, with little or no additional training. This approach is referred to as "prompt-based learning".
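As a rough sketch of the prompting idea described in the Liu et al. survey listed in the references, a classification task can be recast as a fill-in-the-blank sentence that a pre-trained model completes, after which the filled-in word is mapped back to a label. The template, the word-to-label mapping, and the function names below are illustrative assumptions, and no real model is called.

```python
# Hypothetical template: the model is asked to fill in the {answer} slot.
TEMPLATE = "{review} Overall, it was a {answer} movie."

# Hypothetical mapping from the word the model fills in to a task label.
ANSWER_TO_LABEL = {"great": "positive", "terrible": "negative"}

def build_cloze_prompt(review, mask_token="[MASK]"):
    """Wrap a raw review in the template, leaving a blank for the model."""
    return TEMPLATE.format(review=review, answer=mask_token)

def label_from_answer(answer):
    """Map the model's filled-in word back to a sentiment label."""
    return ANSWER_TO_LABEL.get(answer.lower(), "unknown")

prompt = build_cloze_prompt("I loved every minute.")
print(prompt)
print(label_from_answer("great"))
```

The point of the design is that the model itself stays unchanged: only the wording of the input is adapted so that the task looks like the text prediction the model was already trained to do.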

Image: Flow of a pre-trained and fine-tuned language model

Image: Input-output flow of text prompt

What is prompt engineering?

It is the art of instruction - the key to effective communication between humans and machines. The goal is to clearly convey your requirements or questions in a way that optimizes the answers you receive.

Imagine a conversation that flows seamlessly, with every word and sentence chosen strategically and intentionally. Using logical, clear, and precise language, prompt engineering aims to:

Bridge the gap between thought and expression

Ensure the instruction is received to the fullest extent without any misunderstandings

Eliminate any potential for the instruction to be lost in translation

Prompt engineering might revolutionize the way we communicate.
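One way to picture "logical, clear, and precise" instructions is to assemble a prompt from explicit parts instead of a single vague sentence. The sketch below is only an illustration: the field names (task, context, output format, constraints) and the `build_prompt` helper are assumptions made for this example, not an established standard or any vendor's API.

```python
def build_prompt(task, context="", output_format="", constraints=()):
    """Assemble a structured prompt from explicit, labeled parts."""
    parts = [f"Task: {task}"]
    if context:
        parts.append(f"Context: {context}")
    if output_format:
        parts.append(f"Output format: {output_format}")
    for constraint in constraints:
        parts.append(f"Constraint: {constraint}")
    return "\n".join(parts)

# A vague instruction leaves the model guessing about audience, length, and format.
vague_prompt = "Write about Bard."

# A precise instruction spells out what is wanted.
precise_prompt = build_prompt(
    task="Summarize how Google Bard differs from ChatGPT.",
    context="The reader is a non-technical office worker.",
    output_format="Three short bullet points.",
    constraints=["Avoid jargon.", "Stay under 60 words."],
)

print(precise_prompt)
```

Both prompts are just text, but the second one states the audience, the expected shape of the answer, and the limits up front, which is exactly the gap between thought and expression that prompt engineering tries to close.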

Why should we know about prompt engineering?

The integration of AI into society creates new opportunities to increase productivity by automating repetitive tasks. Picture AI-based tools as your subordinates: the better you are at giving clear and precise instructions, the more efficiently they will carry out tasks. In that sense, prompt engineering might soon become a skill as common as using Microsoft Office. Can you excel at your job without Excel?

In conclusion, as AI plays an increasingly significant role in our lives, it's more important than ever to understand how to harness its power. That's why learning prompt engineering is such a vital step in taking control of AI and using it to drive positive change in our world. Let's jump in and seize the AI revolution!

References:

Su, P., Vijay-Shanker, K. Investigation of improving the pre-training and fine-tuning of BERT model for biomedical relation extraction. BMC Bioinformatics 23, 120 (2022).

Liu, P., et al. Pre-Train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. arXiv:2107.13586 (2021).